Part 1. The Problem: The Character's Face Changes Every Time

When you generate images with AI, details such as eye color, hairstyle, and clothing design tend to shift slightly from one generation to the next. The problem is most noticeable in video, where a viewer can end up wondering, "Wait, who is this girl?" Keeping the character consistent makes it much easier for viewers to stay immersed.

Part 2. Midjourney Edition

Midjourney offers two parameters for referencing a character image: Character Reference (--cref) and Omni Reference (--oref).

◾️ Character Reference (--cref)

Locks in a character from a single reference image. Available in Midjourney V6 and Niji 6.

◾️ Omni Reference (--oref)

References a character from an image you supply. Exclusive to Midjourney V7, and it takes one reference image per prompt.

For that reason, a character sheet that combines the front face, full body, and back view of the character in a single image works well as the reference. Describing the character's appearance in the prompt as well makes the results more consistent.
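As a rough illustration of how the two parameters fit into a prompt, here is a minimal Python sketch that assembles the prompt text. The helper name `build_midjourney_prompt` and the example URL are hypothetical and not part of Midjourney; the flags `--cref`, `--cw`, `--oref`, `--ow`, and `--v` are the ones Midjourney documents, but check the current documentation for the accepted weight ranges.

```python
def build_midjourney_prompt(description, ref_url, version="7", weight=None):
    """Append the character-reference flag that matches the model version.

    Hypothetical helper: it only builds the prompt string you would paste
    into Midjourney. It does not call any Midjourney service.
    """
    if version == "7":
        # V7 uses Omni Reference; --ow controls how closely the image is followed.
        parts = [description, "--oref", ref_url]
        if weight is not None:
            parts += ["--ow", str(weight)]
    else:
        # V6 uses Character Reference; --cw is the character weight (0 to 100).
        parts = [description, "--cref", ref_url]
        if weight is not None:
            parts += ["--cw", str(weight)]
    parts += ["--v", version]
    return " ".join(parts)


# Placeholder URL: replace it with the address of your own character sheet.
sheet_url = "https://example.com/character-sheet.png"
print(build_midjourney_prompt("girl with short silver hair, red jacket", sheet_url))
print(build_midjourney_prompt("girl with short silver hair, red jacket",
                              sheet_url, version="6", weight=80))
```

Keeping the appearance description and the reference URL in one place like this makes it harder to drift between prompts.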

Part 3. Stable Diffusion: Teaching a Character to "Stick" with LoRA

If you want to lock in a character’s appearance and ensure they show up consistently across multiple generations, LoRA (Low-Rank Adaptation) in Stable Diffusion is a powerful tool. Simply put, it allows the AI to "learn" the distinctive features of a character, so no matter how many images you generate, the same character keeps showing up with the same look.
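The "low-rank" part of the name explains why a LoRA is small and quick to train. Instead of updating a full weight matrix of size d_out × d_in, LoRA keeps that matrix frozen and trains two thin matrices, B (d_out × r) and A (r × d_in), whose product is added on top. The sketch below only compares the parameter counts; the layer size 768 and rank 8 are illustrative assumptions, not values taken from any particular model.

```python
def lora_param_counts(d_out, d_in, rank):
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    full = d_out * d_in            # updating the whole matrix
    lora = rank * (d_out + d_in)   # B is d_out x rank, A is rank x d_in
    return full, lora


full, lora = lora_param_counts(768, 768, 8)
print(full, lora, f"{lora / full:.1%}")  # prints: 589824 12288 2.1%
```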

To train a LoRA model, you’ll need to prepare around 30 to 50 reference images, tag them appropriately, and fine-tune the base model on them using a dedicated training tool. It takes a bit of effort, but it’s incredibly useful if your character appears in a long-running project or across multiple artworks.
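The tagging step can be sketched as follows. Many LoRA training tools read a caption from a .txt file that shares its name with the image, so this sketch assumes that layout. The function name `write_captions`, the trigger word, and the tags are all hypothetical; check what your training tool actually expects before relying on it.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def write_captions(dataset_dir, trigger_word, tags_by_file):
    """Write one .txt caption per image: the trigger word first, then its tags."""
    written = []
    for image in sorted(Path(dataset_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip captions and any other non-image files
        tags = tags_by_file.get(image.name, [])
        caption = ", ".join([trigger_word, *tags])
        caption_path = image.with_suffix(".txt")
        caption_path.write_text(caption, encoding="utf-8")
        written.append(caption_path)
    return written


# Example call (the folder and tags are placeholders):
# write_captions("dataset/mika", "mikachar",
#                {"001.png": ["silver hair", "red jacket", "smiling"]})
```

Putting the same trigger word at the start of every caption gives you a single token to include in prompts later when you want the character to appear.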

You can teach the AI specifics like: “this girl has this hairstyle, wears this outfit, and often has this expression.” LoRA excels at capturing and reproducing such fine details, and it generally gives more consistent results than relying on prompts or a single reference image alone.

Part 4. Using Reference Images in Other AI Tools

Recently, more and more image generation AIs have introduced features that let you say things like, “Use this girl as the base.”

◾️ Flux.1 Kontext Max: Reads the character’s context from the image and incorporates it into the output

◾️ ChatGPT / Gemini: Shares a consistent world or style through image prompts

◾️ Runway: Maintains visual consistency of characters and backgrounds using the built-in Reference feature in Gen-4

Put simply, all of these tools let you say, “Here’s an image—make something with a similar vibe.” They’re especially useful for fine-tuning the look and avoiding inconsistencies in character design or atmosphere.

Part 5. Vidu Edition: Generate Videos with Consistent Characters

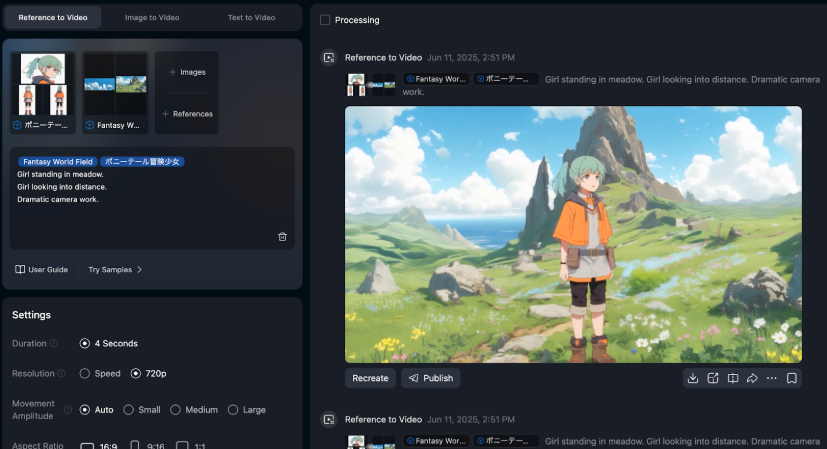

Vidu AI Video Generator offers a "Reference to Video" feature that lets you create videos from still images while keeping character consistency.

◾️ Normal Reference

Perfect for maintaining consistency in a single shot.

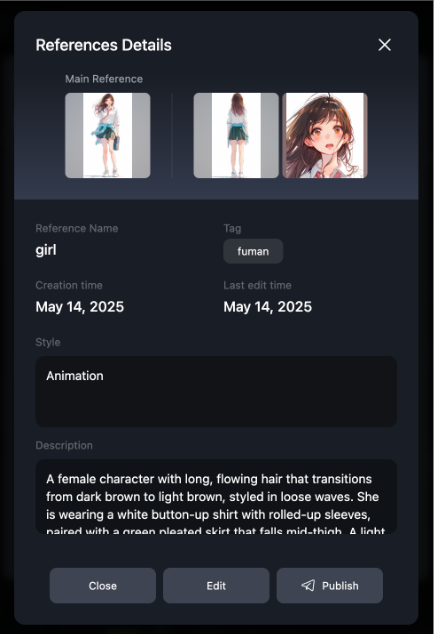

◾️ My Reference

Ideal for ensuring consistency across multiple shots. You can also define a character’s personality and behavior, allowing for not just visual consistency but also continuity in movement and overall mood.

By simply preparing a few reference images—such as characters, backgrounds, and props—you can easily generate videos with consistent characters. Since there's no need to manually create complex keyframes one by one, the production process becomes much more accessible.

(My Reference Settings)

(Reference to Video Generation)

Part 6. Techniques for Improving Consistency with Other Approaches

Character consistency doesn't have to rely entirely on AI tools—creative techniques in image and video editing can also make a big difference.

◾️ Refine Keyframes with Image Editing Software

Use tools like Photoshop to make small adjustments to character images generated by Midjourney or Stable Diffusion. You can also seamlessly place multiple characters into the same scene or blend assets created with different tools.

◾️ Composite in Video Editing Software

Insert character images generated by Vidu into background footage using chroma keying or other compositing techniques. This gives you more precise control over the overall look and flow of your video.
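To show what chroma keying does under the hood, here is a minimal pure-Python sketch that works on pixels stored as (R, G, B) tuples. It is a hard key under simplifying assumptions: the function name `chroma_key`, the green key color, and the tolerance value are illustrative, and real editing software adds soft edges and spill suppression on top of this idea.

```python
def chroma_key(foreground, background, key=(0, 255, 0), tolerance=100):
    """Replace foreground pixels close to the key color with the background.

    Both images are lists of rows, and each row is a list of (R, G, B) tuples.
    """
    result = []
    for fg_row, bg_row in zip(foreground, background):
        row = []
        for fg, bg in zip(fg_row, bg_row):
            # Euclidean distance between this pixel and the key color
            distance = sum((c - k) ** 2 for c, k in zip(fg, key)) ** 0.5
            row.append(bg if distance <= tolerance else fg)
        result.append(row)
    return result


character = [[(0, 255, 0), (200, 50, 50)]]   # green screen pixel, character pixel
scene = [[(10, 10, 10), (10, 10, 10)]]       # dark background
print(chroma_key(character, scene))  # prints: [[(10, 10, 10), (200, 50, 50)]]
```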

By combining these more hands-on methods with AI tools, you can achieve greater flexibility and a more polished final result.

Part 7. FAQs About Maintaining Character Consistency in AI Videos

Q1: Why do AI-generated characters often look different in each image or video frame?

A: AI image generation can produce slight variations in eye color, hairstyle, clothing, and facial expressions each time. In video production, these inconsistencies can break immersion and confuse viewers, making it crucial to use techniques that maintain visual continuity.

Q2: How can Midjourney help keep characters consistent across multiple images?

A: Midjourney provides:

- Character Reference (--cref): Locks in a character using a single reference image (V6 and Niji 6).

- Omni Reference (--oref): References a character from one image (V7), such as a character sheet showing the front, full-body, and back views.

These features allow you to generate consistent character visuals for longer projects or multiple illustrations.

Q3: What is LoRA in Stable Diffusion, and how does it help with character consistency?

A: LoRA (Low-Rank Adaptation) allows Stable Diffusion to "learn" a character’s distinctive traits. Once you train a LoRA model on 30–50 tagged reference images, the AI can reproduce the same hairstyle, outfit, expressions, and other details across multiple images, which helps maintain visual continuity in long-term projects.

Q4: Which other AI tools support consistent character generation?

A: Several AI tools now allow image-based references for consistency:

- Flux.1 Kontext Max: Reads a character’s context from reference images.

- ChatGPT / Gemini: Maintains a consistent world or visual style.

- Runway Gen-4: Uses built-in Reference features to preserve character and background continuity.

These tools help reduce discrepancies in design, style, and atmosphere.

Q5: How does Vidu maintain character consistency in AI-generated videos?

A: Vidu’s Reference to Video feature allows creators to generate videos from still images while keeping characters consistent.

- Normal Reference: Maintains consistency in a single shot.

- My Reference: Ensures continuity across multiple shots, defining character personality and movement for cohesive scenes.

By providing a few reference images, creators can produce videos without manually creating complex keyframes.

Q6: Can traditional editing techniques improve character consistency in AI projects?

A: Yes. Combining AI with creative editing enhances results:

- Image editing software (e.g., Photoshop): Refine keyframes, adjust character images, and blend multiple characters into a scene.

- Video compositing: Use chroma keying or overlays to insert AI-generated characters into consistent backgrounds.

This hybrid approach ensures a polished, professional look across videos and illustrations.

Q7: What are best practices for achieving consistent characters in AI-generated content?

A:

- Prepare high-quality reference images (front, back, and full body).

- Use AI tools like Midjourney --cref/--oref or Stable Diffusion LoRA for visual consistency.

- Apply compositing and editing techniques to refine images and video sequences.

- Maintain clear prompts and documentation for recurring characters across projects.
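One lightweight way to keep that documentation is a small record per character that every prompt is built from. The sketch below is only an illustration: the class `CharacterSheet`, its fields, and the sample values are hypothetical and not tied to any specific tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CharacterSheet:
    """A single source of truth for one recurring character."""
    name: str
    hair: str
    eyes: str
    outfit: str
    reference_images: tuple = ()  # file paths or URLs of the reference images

    def prompt_fragment(self):
        """Return the appearance text to reuse verbatim in every prompt."""
        return f"{self.name}, {self.hair}, {self.eyes}, {self.outfit}"


mika = CharacterSheet(
    name="Mika",
    hair="short silver hair",
    eyes="green eyes",
    outfit="red hooded jacket",
    reference_images=("refs/mika_front.png", "refs/mika_back.png"),
)
print(mika.prompt_fragment() + ", walking through a night market")
```

Because the record is frozen, the description cannot be changed by accident halfway through a project; a redesign means creating a new record on purpose.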